The “Agent Summary” feature introduced in SuperAGI version 0.0.10 has markedly improved the quality of agent output. Agent Summary helps AI agents retain more context about their goals while executing complex tasks that require longer conversations (iterations).

The Problem: Reliance on Short-Term Memory

Earlier, agents relied solely on passing short-term memory (STM) to the language model, which essentially acted as a rolling window of the most recent information based on the model’s token limit. Any context outside this window was lost.
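A rolling window of this kind can be sketched in a few lines of Python. This is an illustrative sketch rather than SuperAGI's code: the function names and the four-characters-per-token estimate are assumptions made for the example.

```python
from typing import Callable, List


def approx_token_count(text: str) -> int:
    """Rough token estimate, assuming about four characters per token."""
    return max(1, len(text) // 4)


def short_term_window(
    messages: List[str],
    token_limit: int,
    count_tokens: Callable[[str], int] = approx_token_count,
) -> List[str]:
    """Return the most recent messages that fit in token_limit, oldest first."""
    window: List[str] = []
    used = 0
    # Walk backwards from the newest message and stop once the budget is spent.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > token_limit:
            break
        window.append(message)
        used += cost
    window.reverse()
    return window
```

Everything older than the returned window is simply dropped, which is the loss of context described above.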

For goals requiring longer runs, this meant agents would often deliver subpar and disjointed responses due to a lack of context about the initial goal and over-reliance on very recent short-term memory.

Introducing Long-Term Summaries

To provide agents with more persistent context, we added long-term summaries (LTS) of prior information to supplement short-term memory.

LTS condenses and summarizes information that has moved outside the STM window.

Together, the STM and LTS are combined into an “Agent Summary” that gets passed to the language model, providing the agent with both recent and earlier information relevant to the goal.

How does Agent Summary work?

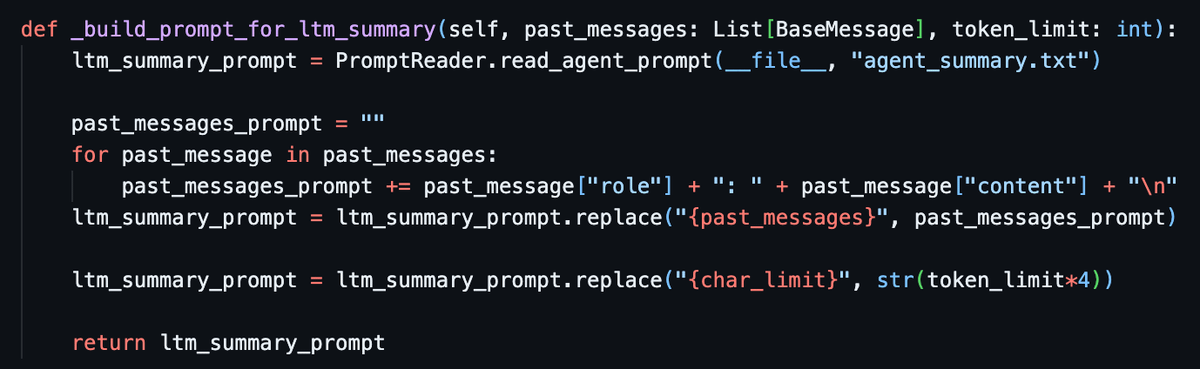

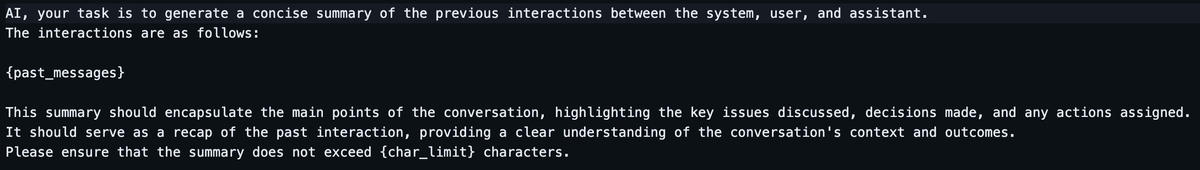

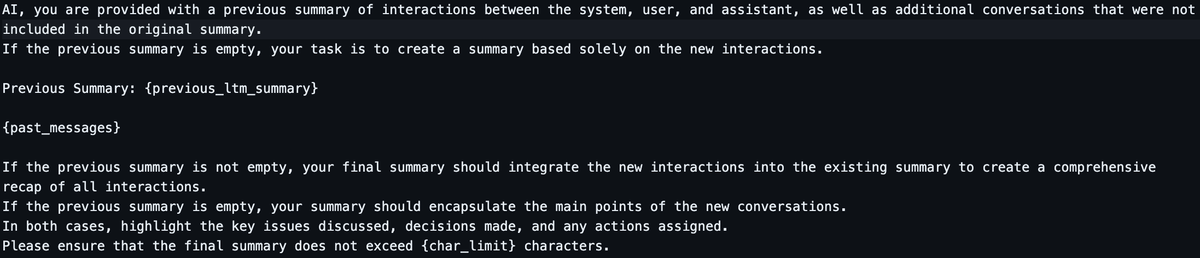

The “_build_prompt_for_ltm_summary” function is used to generate a concise summary of the previous agent iterations.

The summary captures the key issues, the decisions made, and any actions assigned.

The function takes a list of past messages and a token limit as input.

It reads a prompt from a text file, replaces placeholders with the past messages and the character limit (which is four times the token limit), and returns the final prompt.
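The steps described above might look roughly like the following. This is a hedged sketch, not the function from the SuperAGI codebase: the template text, the placeholder names `{past_messages}` and `{char_limit}`, and the `role`/`content` message shape are all assumptions, and the template is inlined here instead of being read from a text file so the example stays self-contained.

```python
from typing import Dict, List

# Hypothetical template; in the description above, the prompt is read from a text file.
SUMMARY_TEMPLATE = (
    "Summarize the following agent iterations in at most {char_limit} characters.\n"
    "Cover the key issues, decisions made, and actions assigned.\n\n"
    "{past_messages}"
)


def build_ltm_summary_prompt(
    past_messages: List[Dict[str, str]],
    token_limit: int,
    template: str = SUMMARY_TEMPLATE,
) -> str:
    """Fill the summary template with past messages and a character limit."""
    # The character limit is four times the token limit.
    char_limit = token_limit * 4
    rendered = "\n".join(f"{m['role']}: {m['content']}" for m in past_messages)
    # str.format fills both placeholders in a single pass.
    return template.format(past_messages=rendered, char_limit=char_limit)
```

For example, a token limit of 100 yields a prompt that asks for a summary of at most 400 characters.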

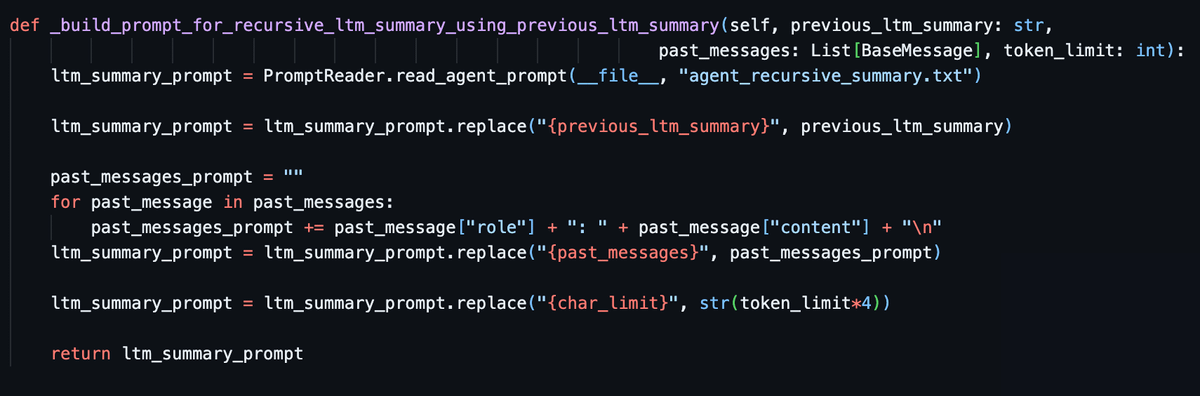

The “_build_prompt_for_recursive_ltm_summary_using_previous_ltm_summary” function, on the other hand, is used when there is a previous summary of interactions and additional conversations that were not included in the original summary.

This function takes a previous long-term summary, a list of past messages, and a token limit as input. It reads a prompt from a text file, replaces placeholders with the previous summary, the past messages, and the character limit, and returns the final prompt.
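A recursive variant could be sketched along the same lines. Again, this is only an illustration: the template wording, the placeholder names, and the message shape are hypothetical, and the sketch assumes the character limit follows the same four-times-the-token-limit rule as the non-recursive prompt.

```python
from typing import Dict, List

# Hypothetical template; in the description above, the prompt is read from a text file.
RECURSIVE_TEMPLATE = (
    "Summary so far:\n{previous_summary}\n\n"
    "Newer iterations not yet covered:\n{past_messages}\n\n"
    "Write an updated summary in at most {char_limit} characters."
)


def build_recursive_ltm_summary_prompt(
    previous_summary: str,
    past_messages: List[Dict[str, str]],
    token_limit: int,
    template: str = RECURSIVE_TEMPLATE,
) -> str:
    """Fill the template with the prior summary, new messages, and a character limit."""
    # Assumption: same rule as the non-recursive prompt (4 characters per token).
    char_limit = token_limit * 4
    rendered = "\n".join(f"{m['role']}: {m['content']}" for m in past_messages)
    return template.format(
        previous_summary=previous_summary,
        past_messages=rendered,
        char_limit=char_limit,
    )
```

Because the previous summary is itself an input, each call folds the new iterations into a summary of bounded size instead of re-reading the full history.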

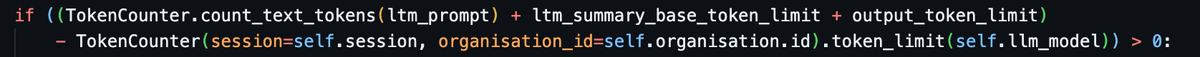

The “_build_prompt_for_recursive_ltm_summary_using_previous_ltm_summary” function is used instead of the “_build_prompt_for_ltm_summary” function when the token count of the LTM prompt, the base token limit for the LTS, and the output token limit together exceed the LLM token limit.

This ensures that the final prompt of the agent summary does not exceed the token limit of the language model, while still encapsulating the key highlights of the new iterations and integrating them into the existing summary.
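The selection rule can be expressed as a single comparison. The function and parameter names below are hypothetical, chosen to mirror the three quantities named in the text; the example numbers are made up.

```python
def needs_recursive_summary(
    ltm_prompt_tokens: int,
    lts_base_token_limit: int,
    output_token_limit: int,
    llm_token_limit: int,
) -> bool:
    """Return True when the plain summary prompt would not fit in the model's limit."""
    required = ltm_prompt_tokens + lts_base_token_limit + output_token_limit
    return required > llm_token_limit


# Example with made-up numbers: 3000 + 500 + 800 = 4300 tokens, over a 4096 limit.
use_recursive = needs_recursive_summary(3000, 500, 800, 4096)
```

When the check returns True, the agent would summarize incrementally on top of the previous summary; otherwise the plain summary prompt is enough.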

Balancing Short-Term and Long-Term Memory

In the current implementation, STM is weighted at 75% and LTS at 25% in the Agent Summary context. The higher weighting for STM keeps agents focused on the most recent information, so they can act on the latest data without being overwhelmed by historical detail.
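One plausible reading of this weighting is a split of the available token budget. The sketch below assumes that interpretation; the function name and the idea of dividing a single token budget are assumptions, not details confirmed by the text.

```python
from typing import Tuple


def split_context_budget(
    context_tokens: int, stm_share: float = 0.75
) -> Tuple[int, int]:
    """Split a token budget between short-term memory and long-term summary."""
    if not 0.0 <= stm_share <= 1.0:
        raise ValueError("stm_share must be between 0 and 1")
    stm_tokens = int(context_tokens * stm_share)
    # Whatever STM does not use goes to the long-term summary.
    lts_tokens = context_tokens - stm_tokens
    return stm_tokens, lts_tokens


# With a 4000-token budget, STM gets 3000 tokens and LTS gets 1000.
stm_budget, lts_budget = split_context_budget(4000)
```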

Early results show Agent Summaries improving goal completion and reducing disjointed responses. We look forward to further testing and optimizations of this dual memory approach as we enhance SuperAGI agents.

View Agent Summary Benchmarks here